直接上代码如下:

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class KeyedStateDeepReview {

public static void main(String[] args) throws Exception {

//1.创建一个 flink steam 程序的执行环境

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

// 2. 重启相关配置

// 只有开启了checkpointing,才会有重启策略

env.enableCheckpointing(5000); // 5秒为一个周期

// // 设置最多重启3次,每次间隔两秒

// 默认的重启策略是 延迟无限重启CheckpointConfig

env.getConfig().setRestartStrategy(RestartStrategies.fixedDelayRestart(3, 2000));

//为了实现Exactly_once,必须呀记录偏移量

env.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

env.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

//3. 创建DataStream

DataStreamSource<String> lines = env.socketTextStream( "192.168.***.***", 8888);

//Transformation(s) 对数据进行处理操作

SingleOutputStreamOperator<Tuple2<String, Integer>> wordAndOne = lines.map(new MapFunction<String, Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> map(String word) {

//将每个单词与 1 组合,形成一个元组

return Tuple2.of(word, 1);

}

});

//4. Transformation 进行分组聚合(keyBy:将key相同的分到一个组中)

KeyedStream<Tuple2<String, Integer>, Tuple> keyedStream = wordAndOne.keyBy(0);

// 使用 KeyedState 通过中间状态求和

SingleOutputStreamOperator<Tuple2<String, Integer>> summed = keyedStream.map(new KeyedStateSum());

//5.调用Sink (Sink必须调用)

summed.print();

//6. 启动

env.execute("KeyedStateDeepReview");

}

}

import org.apache.flink.api.common.functions.RichMapFunction;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.common.typeinfo.Types;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.configuration.Configuration;

public class KeyedStateSum extends RichMapFunction<Tuple2<String,Integer>, Tuple2<String,Integer>> {

//状态数据不参与序列化,添加 transient 修饰

private transient ValueState<Tuple2<String,Integer>> valueState;

// open方法在完成构造方法后执行一次

@Override

public void open(Configuration parameters) throws Exception {

ValueStateDescriptor<Tuple2<String,Integer>> stateDescriptor =

new ValueStateDescriptor<>("wc-keyed-state", Types.TUPLE(Types.STRING, Types.INT));

// 每一个组key都有自己的状态,无需特别指定是哪个word组的状态

valueState = getRuntimeContext().getState(stateDescriptor);

}

@Override

public Tuple2<String, Integer> map(Tuple2<String, Integer> value) throws Exception {

//输入的单词

String word = value.f0;

//输入的次数

Integer count = value.f1;

//根据State获取中间数据

Tuple2<String,Integer> historyKV = valueState.value();

//根据State中间数据,进行累加

if (historyKV != null) {

historyKV.f1 += count;

//累加后,更新State数据

valueState.update(historyKV);

} else {

// 更新历史数据

valueState.update(value);

}

// return historyKV; //这样写会报空指针异常

// return Tuple2.of(word,valueState.value().f1);

return valueState.value();

}

}

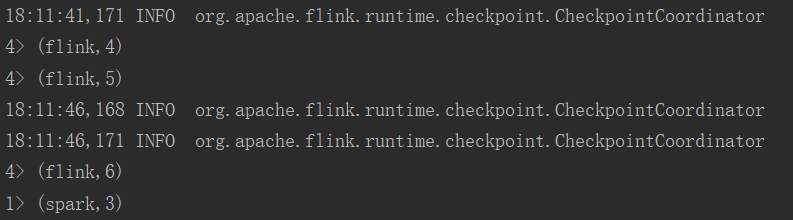

测试结果:成功实现累加功能

版权声明:本文不是「本站」原创文章,版权归原作者所有 | 原文地址: