1. Introduction

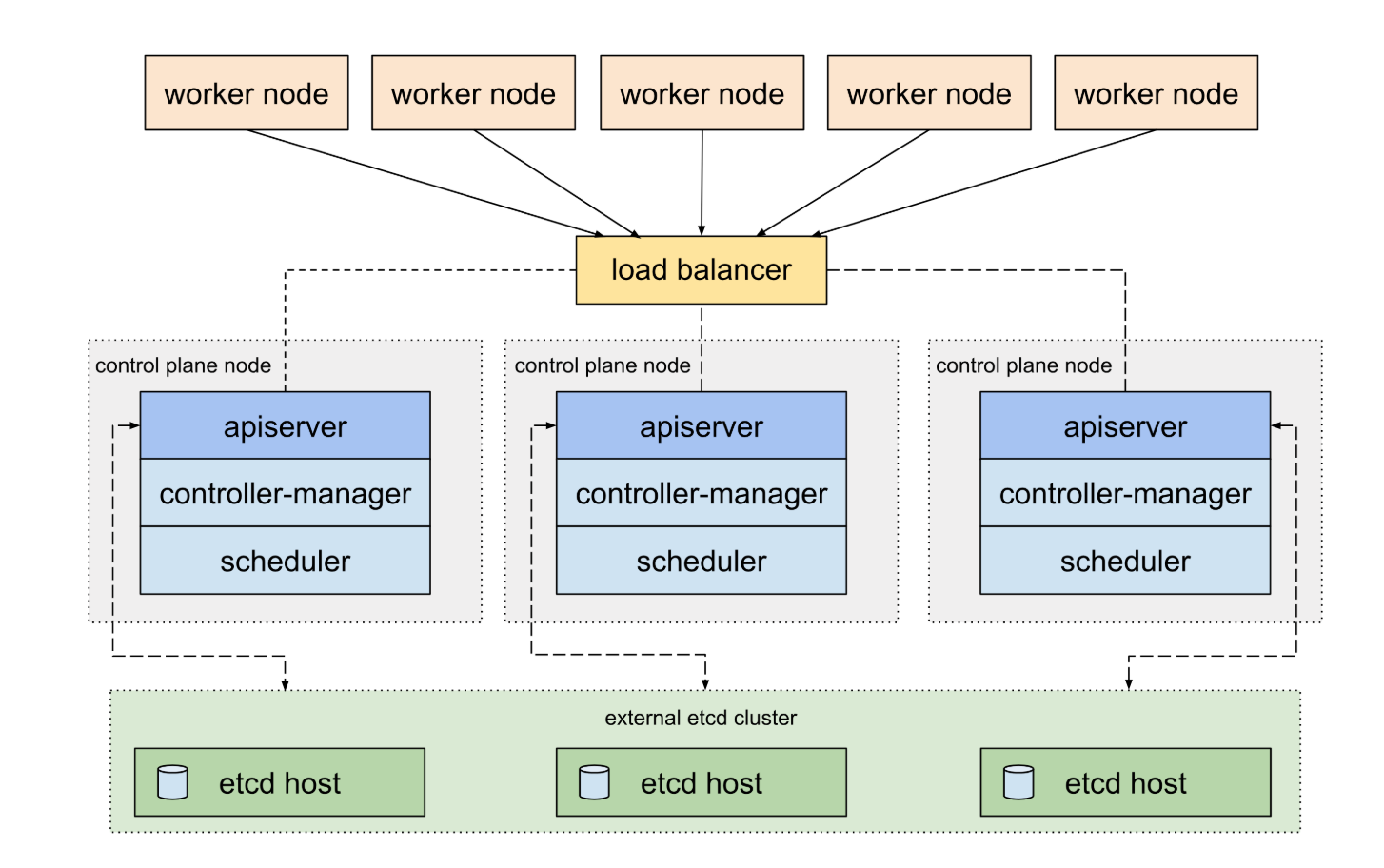

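A highly available Kubernetes cluster has three master nodes running three APIServer instances. These three instances can sit behind the same load balancer, which provides high availability.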

Reposted from https://blog.csdn.net/cloudvtech

2. Preparing the Test Cluster Hosts

2.1 Host roles in the test environment

- 3 Kubernetes masters:
  - 192.168.56.101 (k8s-master-01)
  - 192.168.56.102 (k8s-master-02)
  - 192.168.56.103 (k8s-master-03)
- 1 Kubernetes node:
  - 192.168.56.111 (k8s-node-01)
- 1 load balancer in front of the 3 API servers:
  - 192.168.56.100
- 1 etcd cluster of 3 etcd servers, running on 192.168.56.101/102/103 (the master hosts)

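For installing the etcd cluster, see the article "Kubernetes Production Practice Series, Part 4: Deploying a Secure, Encrypted etcd Cluster on Virtual Machines".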
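The article does not say which software runs on the load balancer at 192.168.56.100. Assuming HAProxy in TCP mode (an assumption, not the author's stated setup), the sketch below prints a frontend/backend fragment that forwards port 6443 on the LB to the three masters, assuming each APIServer also listens on 6443. TCP mode passes TLS through untouched, so clients still validate the APIServer certificate directly, which is why the LB address has to appear in that certificate's hosts list. `gen_haproxy_apiserver_cfg` is a hypothetical helper, and the fragment still needs HAProxy's usual `global` and `defaults` sections (timeouts and so on).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an HAProxy frontend/backend fragment (TCP mode)
# forwarding LB_IP:6443 to each master's APIServer.
# Assumptions: HAProxy is the LB (not stated in the source) and every
# APIServer listens on port 6443 on its master.
gen_haproxy_apiserver_cfg() {
  local lb_ip="$1"; shift            # address the LB listens on
  local port=6443                     # APIServer port used in this article
  printf 'frontend kube-apiserver\n'
  printf '    bind %s:%s\n' "$lb_ip" "$port"
  printf '    mode tcp\n'
  printf '    default_backend kube-apiserver\n\n'
  printf 'backend kube-apiserver\n'
  printf '    mode tcp\n'
  printf '    balance roundrobin\n'
  local entry name ip
  for entry in "$@"; do               # each argument has the form NAME=IP
    name="${entry%%=*}"
    ip="${entry#*=}"
    printf '    server %s %s:%s check\n' "$name" "$ip" "$port"
  done
}

gen_haproxy_apiserver_cfg 192.168.56.100 \
  k8s-master-01=192.168.56.101 \
  k8s-master-02=192.168.56.102 \
  k8s-master-03=192.168.56.103
```

Round-robin is just a simple default here. Because `check` marks a master down when its port stops accepting connections, one failed APIServer drops out of rotation, which is the behavior the HA setup relies on.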

2.2 Security and DNS preparation

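On every host, stop and disable the firewall:

```shell
systemctl stop firewalld
systemctl disable firewalld
```

Disable SELinux permanently by setting the following in /etc/selinux/config (this takes effect after a reboot), and switch it to permissive mode for the current boot with `setenforce 0`:

```
SELINUX=disabled
```

```shell
setenforce 0
```

In /etc/ssh/sshd_config, turn off reverse DNS lookups of SSH clients, then restart sshd:

```
UseDNS no
```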
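Editing these two files by hand on every host is error-prone. Here is a minimal sketch of a helper that replaces an existing or commented-out setting, or appends it if absent. `set_config_line` is a hypothetical name, not part of any tool, and the sketch assumes a Linux host with `grep`, `awk` and `mktemp`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make FILE contain LINE as the setting for KEY.
# Lines starting with KEY (optionally commented out with '#') followed by
# whitespace, '=' or end of line are replaced; if none exist, LINE is appended.
set_config_line() {
  local file="$1" key="$2" line="$3" tmp
  tmp="$(mktemp)" || return 1
  if grep -Eq '^#?[[:space:]]*'"$key"'([[:space:]=]|$)' "$file"; then
    awk -v key="$key" -v line="$line" '
      $0 ~ ("^#?[ \t]*" key "([ \t=]|$)") { print line; next }  # replace setting
      { print }                                                   # keep other lines
    ' "$file" > "$tmp"
  else
    cat "$file" > "$tmp"
    printf '%s\n' "$line" >> "$tmp"
  fi
  cat "$tmp" > "$file"   # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Usage on each host (as root):
# set_config_line /etc/selinux/config SELINUX SELINUX=disabled
# set_config_line /etc/ssh/sshd_config UseDNS "UseDNS no" && systemctl restart sshd
```

The key must be followed by whitespace, `=` or end of line, so `SELINUX` does not accidentally rewrite the neighbouring `SELINUXTYPE=` line.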

2.3 Preparing the cluster certificates

k8s-root-ca-csr.json

```json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 4096
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

k8s-gencert.json

```json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
```
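Both the default and the `kubernetes` profile set `expiry` to 87600h, a duration in hours. Converting it shows the certificates stay valid for about ten years. Below is a trivial shell sketch of that arithmetic. The function name `expiry_to_days` is illustrative only.

```shell
#!/usr/bin/env bash
# Illustrative only: convert a cfssl-style expiry in hours (e.g. "87600h")
# into whole days; integer division drops any remainder.
expiry_to_days() {
  local hours="${1%h}"        # strip the trailing "h"
  echo $(( hours / 24 ))
}

days="$(expiry_to_days 87600h)"
# 87600 / 24 = 3650 days, and 3650 / 365 = 10 years (365-day years)
echo "87600h = ${days} days = $(( days / 365 )) years"
```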

kubernetes-csr.json

```json
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "10.254.0.1",
    "192.168.56.101",
    "192.168.56.100",
    "192.168.56.104",
    "192.168.56.105",
    "192.168.56.106",
    "192.168.56.102",
    "192.168.56.103",
    "192.168.56.111",
    "192.168.56.112",
    "192.168.56.113",
    "192.168.56.114",
    "192.168.56.115",
    "192.168.56.116",
    "192.168.56.117",
    "localhost",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "k8s-master-01",
    "k8s-master-02",
    "k8s-master-03",
    "k8s-master-04",
    "k8s-master-05",
    "k8s-master-06",
    "k8s-node-01",
    "k8s-node-02",
    "k8s-node-03",
    "k8s-node-04",
    "k8s-node-05",
    "k8s-node-06",
    "k8s-node-07"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```
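The `hosts` list becomes the Subject Alternative Names of the APIServer certificate, so every address or name clients use to reach the APIServer must appear in it. That includes the LB address 192.168.56.100, the master IPs and 127.0.0.1. It also includes 10.254.0.1, which is presumably the ClusterIP of the in-cluster `kubernetes` service (the first address of a service range this section does not show). The extra 192.168.56.x addresses and the k8s-master-04 to k8s-master-06 and k8s-node-* names appear to be reserved for later expansion. The following is a hedged sketch of a pre-flight check that the CSR lists the required entries before running cfssl. `check_csr_hosts` is hypothetical and does a plain text match, not a JSON parse.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: verify that a cfssl CSR file contains each
# required host as a quoted string. Matching the surrounding quotes keeps
# "192.168.56.10" from matching "192.168.56.100". This is not a JSON parse,
# so a value that appears elsewhere in the file (e.g. in "CN") also counts.
check_csr_hosts() {
  local csr="$1"; shift
  local missing=0 h
  for h in "$@"; do
    if ! grep -Fq "\"${h}\"" "$csr"; then
      echo "missing SAN entry: ${h}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# check_csr_hosts kubernetes-csr.json 127.0.0.1 10.254.0.1 192.168.56.100 \
#   192.168.56.101 192.168.56.102 192.168.56.103 && echo "hosts OK"
```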

kube-proxy-csr.json

```json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

Generate the certificates and keys used by the different components (kubernetes, admin, kube-proxy):

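```shell
# Create the self-signed root CA: k8s-root-ca.pem and k8s-root-ca-key.pem
cfssl gencert --initca=true k8s-root-ca-csr.json | cfssljson --bare k8s-root-ca

# Sign a certificate/key pair for each component with the root CA,
# using the "kubernetes" profile defined in k8s-gencert.json
for targetName in kubernetes admin kube-proxy; do
  cfssl gencert --ca k8s-root-ca.pem --ca-key k8s-root-ca-key.pem \
    --config k8s-gencert.json --profile kubernetes \
    "${targetName}-csr.json" | cfssljson --bare "${targetName}"
done

# Inspect the APIServer certificate (check the Subject Alternative Names)
openssl x509 -in kubernetes.pem -noout -text
```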

```
[root@k8s-tools k8s-ca]# ls -l
-rw-r--r--. 1 root root 1013 Oct 31 00:31 admin.csr
-rw-r--r--. 1 root root  231 Oct 30 02:56 admin-csr.json
-rw-------. 1 root root 1675 Oct 31 00:31 admin-key.pem
-rw-r--r--. 1 root root 1753 Oct 31 00:31 admin.pem
-rw-r--r--. 1 root root  292 Oct 30 02:53 k8s-gencert.json
-rw-r--r--. 1 root root 1695 Oct 31 00:31 k8s-root-ca.csr
-rw-r--r--. 1 root root  210 Oct 30 02:53 k8s-root-ca-csr.json
-rw-------. 1 root root 3243 Oct 31 00:31 k8s-root-ca-key.pem
-rw-r--r--. 1 root root 2057 Oct 31 00:31 k8s-root-ca.pem
-rw-r--r--. 1 root root 1013 Oct 31 00:31 kube-proxy.csr
-rw-r--r--. 1 root root  232 Oct 30 02:56 kube-proxy-csr.json
-rw-------. 1 root root 1679 Oct 31 00:31 kube-proxy-key.pem
-rw-r--r--. 1 root root 1753 Oct 31 00:31 kube-proxy.pem
-rw-r--r--. 1 root root 1622 Oct 31 00:31 kubernetes.csr
-rw-r--r--. 1 root root 1202 Oct 31 00:31 kubernetes-csr.json
-rw-------. 1 root root 1675 Oct 31 00:31 kubernetes-key.pem
-rw-r--r--. 1 root root 2334 Oct 31 00:31 kubernetes.pem
```
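The listing shows three files per certificate (`.csr`, `-key.pem`, `.pem`), with the private keys at mode 600 (`-rw-------`). Below is a hedged sketch that checks a directory for that layout before the files are copied to the hosts. `check_cert_set` is a hypothetical helper, and the permission test uses POSIX `find -perm`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each NAME, check that DIR holds NAME.csr,
# NAME.pem and NAME-key.pem, and that the private key is not readable
# by group or others (the listing above shows the keys at mode 600).
check_cert_set() {
  local dir="$1"; shift
  local rc=0 name f key
  for name in "$@"; do
    for f in "${name}.csr" "${name}.pem" "${name}-key.pem"; do
      if [ ! -f "${dir}/${f}" ]; then
        echo "missing: ${dir}/${f}" >&2
        rc=1
      fi
    done
    key="${dir}/${name}-key.pem"
    # find prints the path only if the group-read or other-read bit is set
    if [ -f "$key" ] && [ -n "$(find "$key" \( -perm -040 -o -perm -004 \))" ]; then
      echo "too permissive: ${key}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage, from the certificate directory:
# check_cert_set . k8s-root-ca kubernetes admin kube-proxy
```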

2.4 Generating the token and kubeconfig files

Make sure each generated kubeconfig points at the address of the load balancer in front of the APIServers:

```yaml
server: https://192.168.56.100:6443
```
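A kubeconfig generated against a single master's address would still work, but it would silently bypass the LB. A quick check of the `server:` lines can catch that. Here is a minimal sketch. `check_kubeconfig_server` is hypothetical and only greps the YAML, it does not parse it.

```shell
#!/usr/bin/env bash
# Hypothetical check: every "server:" entry in a kubeconfig must equal the
# expected APIServer LB URL. Plain text matching, not a YAML parser.
check_kubeconfig_server() {
  local kubeconfig="$1" expected="$2" servers bad
  # Collect the URL after each "server:" key
  servers="$(grep -E '^[[:space:]]*server:' "$kubeconfig" | awk '{print $2}')"
  if [ -z "$servers" ]; then
    echo "no server entry in ${kubeconfig}" >&2
    return 1
  fi
  # Any line that is not exactly the expected URL is a mismatch
  bad="$(printf '%s\n' "$servers" | grep -vxF "$expected")"
  if [ -n "$bad" ]; then
    echo "unexpected server(s) in ${kubeconfig}: ${bad}" >&2
    return 1
  fi
  return 0
}

# Usage:
# check_kubeconfig_server bootstrap.kubeconfig https://192.168.56.100:6443
```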

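```shell
# Random token: 16 bytes from /dev/urandom printed as hex, spaces removed
export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Static token file, one entry per line: token,user,uid,"group"
cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF
```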
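The pipeline prints 16 random bytes as hex words with `od` and then strips the spaces, so the token should be exactly 32 lowercase hex characters. token.csv then holds one line of the form `token,user,uid,"group"`. Below is a hedged sketch that generates a token the same way and validates the resulting line. `gen_bootstrap_token` and `valid_token_csv_line` are illustrative names, and the check covers only this four-field layout.

```shell
#!/usr/bin/env bash
# Same pipeline as above: 16 random bytes -> hex words via od -> spaces removed.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' '
}

# Illustrative check of one token.csv line: token,user,uid,"group"
# where token is 32 lowercase hex characters and uid is numeric.
valid_token_csv_line() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
}

BOOTSTRAP_TOKEN="$(gen_bootstrap_token)"
line="${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
valid_token_csv_line "$line" && echo "token.csv line looks valid"
```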

The bootstrap.kubeconfig used by the kubelet on each node to connect to the APIServer LB:

export KUBE_APISERVER="https://192.168.56

Copyright notice: This article is not an original work of this site; copyright belongs to the original author | Original URL: