Deploying Alertmanager for Email/DingTalk/WeChat Alerts

https://www.qikqiak.com/k8s-book/docs/57.AlertManager%E7%9A%84%E4%BD%BF%E7%94%A8.html

https://www.cnblogs.com/xiangsikai/p/11433276.html

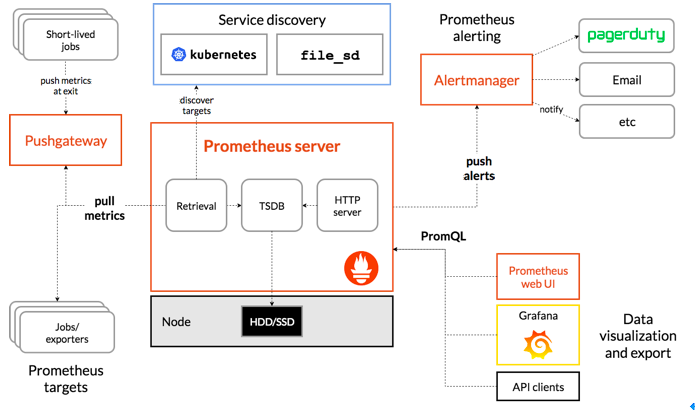

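1 Introduction

Alertmanager receives the alerts sent by Prometheus. It supports a wide range of notification channels and makes it easy to deduplicate, de-noise, and group alerts, which makes it a modern alert-notification system.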

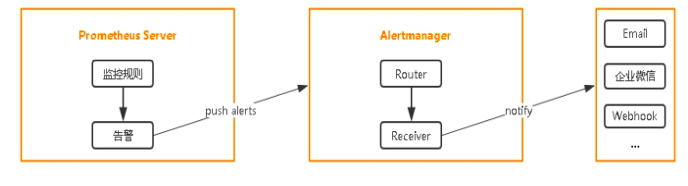

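2 The main steps for setting up alerting and notifications are:

I. Deploy Alertmanager

II. Configure Prometheus to communicate with Alertmanager

III. Configure alerting

1. Point Prometheus at the rules directory

2. Store the alerting rules in a ConfigMap

3. Mount the ConfigMap into the container's rules directory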

3 Deploy Alertmanager

3.1 Storage on a dynamically provisioned PV: alertmanager-pvc.yaml

[root@k8s-master alertmanager]# cat alertmanager-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: "2Gi"

[root@k8s-master alertmanager]# kubectl get pv,pvc -n kube-system | grep alert

persistentvolume/kube-system-alertmanager-pvc-f36ec996-fdd3-4cdd-9735-423c5af1a8c9 2Gi RWO Delete Bound kube-system/alertmanager managed-nfs-storage 4m57s

persistentvolumeclaim/alertmanager Bound kube-system-alertmanager-pvc-f36ec996-fdd3-4cdd-9735-423c5af1a8c9 2Gi RWO managed-nfs-storage 4m57s

[root@k8s-master alertmanager]#

3.2 Main configuration file (alert delivery settings): alertmanager-configmap.yaml

[root@k8s-master alertmanager]# cat alertmanager-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  # Name of the ConfigMap
  name: alertmanager-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      # SMTP settings for alert emails
      smtp_smarthost: 'smtp.163.com:25'
      smtp_from: 'w.jjwx@163.com'
      smtp_auth_username: 'w.jjwx@163.com'
      smtp_auth_password: '<password>'
    receivers:
      - name: default-receiver
        email_configs:
          - to: "314144952@qq.com"
    route:
      group_interval: 1m
      group_wait: 10s
      receiver: default-receiver
      repeat_interval: 1m

[root@k8s-master alertmanager]# kubectl apply -f alertmanager-configmap.yaml

configmap/alertmanager-config created

[root@k8s-master alertmanager]#

[root@k8s-master alertmanager]# kubectl get cm -n kube-system| grep alert

alertmanager-config 1 12m

3.3 Deploy the core component: alertmanager-deployment.yaml (no changes needed)

[root@k8s-master alertmanager]# cat alertmanager-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - name: prometheus-alertmanager
          image: "prom/alertmanager:v0.14.0"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/alertmanager.yml
            - --storage.path=/data
            - --web.external-url=/
          ports:
            - containerPort: 9093
          readinessProbe:
            httpGet:
              path: /#/status
              port: 9093
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: storage-volume
              mountPath: "/data"
              subPath: ""
          resources:
            limits:
              cpu: 10m
              memory: 50Mi
            requests:
              cpu: 10m
              memory: 50Mi
        - name: prometheus-alertmanager-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9093/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
      volumes:
        - name: config-volume
          configMap:
            name: alertmanager-config
        - name: storage-volume
          persistentVolumeClaim:
            claimName: alertmanager

[root@k8s-master alertmanager]# kubectl get deploy,pod -n kube-system| grep alert

deployment.extensions/alertmanager 1/1 1 1 9m21s

pod/alertmanager-6778cc5b7c-2gc9v 2/2 Running 0 9m21s

[root@k8s-master alertmanager]#

3.4 Expose the Service port

[root@k8s-master alertmanager]# cat alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Alertmanager"
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 9093
  selector:
    k8s-app: alertmanager
  type: "NodePort"

[root@k8s-master alertmanager]# kubectl get svc -n kube-system| grep alert

alertmanager NodePort 10.111.52.119 <none> 80:32587/TCP 18h

[root@k8s-master alertmanager]#

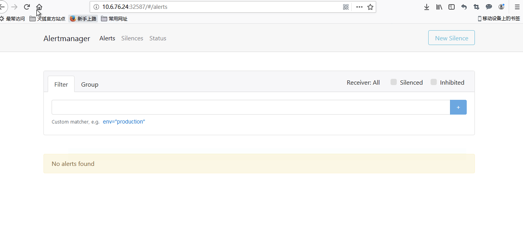
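Kubernetes picked the NodePort above (32587) at random. If you want a stable port for the Alertmanager UI, you can pin it in the Service spec. This is a minimal sketch: 30093 is a hypothetical value, and it must be free and fall within the cluster's NodePort range (30000-32767 by default).

```yaml
spec:
  type: NodePort
  selector:
    k8s-app: alertmanager
  ports:
    - name: http
      port: 80            # ClusterIP port used inside the cluster (alertmanager:80)
      protocol: TCP
      targetPort: 9093    # Alertmanager's container port
      nodePort: 30093     # hypothetical fixed port; must be inside --service-node-port-range
```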

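3.5 The Alertmanager console

On this page we can filter, group, and so on. It also introduces two new concepts: Inhibition and Silences.

- Inhibition: suppresses notifications for certain alerts when certain other alerts are already firing. For example, when an alert is firing that reports the whole cluster as unreachable, Alertmanager can be configured to mute all other alerts concerning that cluster. This prevents notifications for hundreds or thousands of firing alerts that are unrelated to the actual problem. Inhibition is configured in alertmanager.yml (the inhibit_rules section).

- Silences: a silence is a simple way to mute matching alerts for a given period. Silences are defined with matchers, similar to the routing tree. Incoming alerts are checked against every active silence, by equality or regular-expression match; if an alert matches, no notification is sent to the receivers.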
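As a sketch of what Inhibition looks like in alertmanager.yml: while a critical alert fires for a cluster, warning-level notifications carrying the same cluster label value are muted. The alert name ClusterUnreachable and the cluster label are hypothetical. source_match/target_match are the keys understood by v0.14.0; newer releases also accept source_matchers/target_matchers.

```yaml
inhibit_rules:
  - source_match:
      alertname: ClusterUnreachable   # hypothetical alert meaning "the whole cluster is down"
      severity: critical
    target_match:
      severity: warning               # notifications to mute while the source alert fires...
    equal: ['cluster']                # ...but only if source and target share the same cluster value
```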

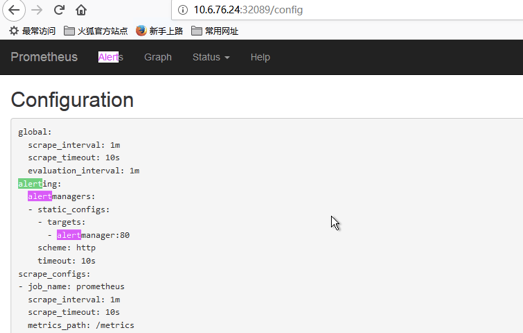

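4 Configure Prometheus to communicate with Alertmanager

Edit prometheus-configmap.yaml and add the Alertmanager binding.

Modify the alerting block at the end of the file and comment out any alerting settings that were there before:

alerting:
  alertmanagers:
    - static_configs:
        - targets: ["alertmanager:80"]  # set this to your Alertmanager Service name; inside the cluster it is reached by Service name

Apply the configuration:

kubectl apply -f prometheus-configmap.yaml

Check in the web console that the configuration has taken effect.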
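The short name alertmanager resolves only because Prometheus and the Alertmanager Service are both in kube-system. If Prometheus runs in a different namespace, a sketch using the Service's fully qualified DNS name (assuming the default cluster domain cluster.local):

```yaml
alerting:
  alertmanagers:
    - static_configs:
        # <service>.<namespace>.svc.<cluster-domain>:<service port>
        - targets: ["alertmanager.kube-system.svc.cluster.local:80"]
```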

5 Configure alerts

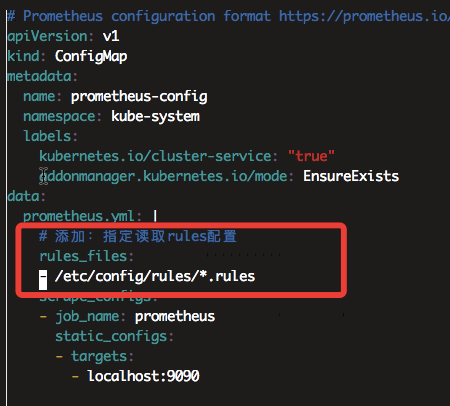

5.1 Point Prometheus at the rules directory

Edit prometheus-configmap.yaml to add the alerting rules:

# Added: load the rule files
rule_files:
  - /etc/config/rules/*.rules

Apply the configuration:

kubectl apply -f prometheus-configmap.yaml
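Rules listed in rule_files are evaluated every evaluation_interval (1m by default), and together with each rule's `for` this determines how soon an alert can move from pending to firing. A sketch of setting it explicitly in prometheus.yml; 15s is an illustrative value, not one taken from this setup:

```yaml
global:
  scrape_interval: 15s       # illustrative; the default is 1m
  evaluation_interval: 15s   # how often the rules in rule_files are evaluated; the default is 1m
rule_files:
  - /etc/config/rules/*.rules
```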

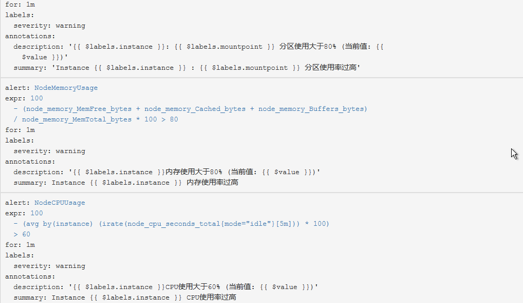

5.2 Store the alerting rules in a ConfigMap

Create a YAML file that stores the alerting rules in a ConfigMap:

# vim prometheus-rules.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: kube-system
data:
  # General rules
  general.rules: |
    groups:
      - name: general.rules
        rules:
          - alert: InstanceDown
            expr: up == 0
            for: 1m
            labels:
              severity: error
            annotations:
              summary: "Instance {{ $labels.instance }} is down"
              description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
  # Resource monitoring for all nodes
  node.rules: |
    groups:
      - name: node.rules
        rules:
          - alert: NodeFilesystemUsage
            expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is too high"
              description: "{{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is above 80% (current value: {{ $value }})"
          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} memory usage is too high"
              description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
          - alert: NodeCPUUsage
            expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} CPU usage is too high"
              description: "{{ $labels.instance }} CPU usage is above 60% (current value: {{ $value }})"

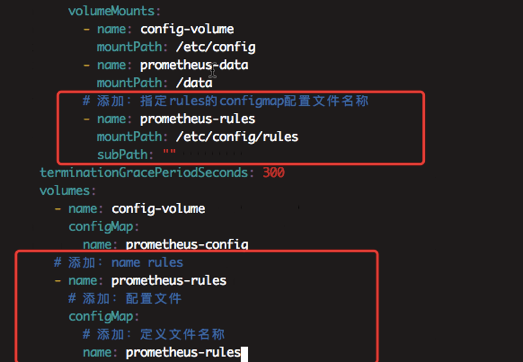

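5.3 Mount the ConfigMap into the container's rules directory

Change the mount points; data stays on the dynamically provisioned Prometheus PV deployed earlier:

# vim prometheus-statefulset.yaml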

          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: prometheus-data
              mountPath: /data
            # Added: mount the rules ConfigMap
            - name: prometheus-rules
              mountPath: /etc/config/rules
              subPath: ""
      terminationGracePeriodSeconds: 300
      volumes:
        - name: config-volume
          configMap:
            name: prometheus-config
        # Added: the rules volume
        - name: prometheus-rules
          # Added: backed by a ConfigMap
          configMap:
            # Added: name of the ConfigMap
            name: prometheus-rules

Create the ConfigMap and update the StatefulSet:

kubectl apply -f prometheus-rules.yaml

# If updating prometheus-statefulset fails, delete it first:

# kubectl delete -f prometheus-statefulset.yaml

kubectl apply -f prometheus-statefulset.yaml

5.4 The complete Prometheus configuration files

Automatic PVC provisioning (NFS exports on the server):

[root@k8s-master prometheus]# cat /etc/exports

/data/volumes/v1 10.6.76.0/24(rw,no_root_squash)

/data/volumes/v2 10.6.76.0/24(rw,no_root_squash)

/data/volumes/v3 10.6.76.0/24(rw,no_root_squash)

[root@k8s-master prometheus]#

prometheus-rules.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: kube-system
data:
  # General rules
  general.rules: |
    groups:
      - name: general.rules
        rules:
          - alert: InstanceDown
            expr: up == 0
            for: 1m
            labels:
              severity: error
            annotations:
              summary: "Instance {{ $labels.instance }} is down"
              description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
  # Resource monitoring for all nodes
  node.rules: |
    groups:
      - name: node.rules
        rules:
          - alert: NodeFilesystemUsage
            expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is too high"
              description: "{{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is above 80% (current value: {{ $value }})"
          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} memory usage is too high"
              description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
          - alert: NodeCPUUsage
            expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} CPU usage is too high"
              description: "{{ $labels.instance }} CPU usage is above 60% (current value: {{ $value }})"


prometheus-rbac.yaml

apiVersion: v1
# Create a ServiceAccount to hold the permissions
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups:
      - ""
    # Granted permissions
    resources:
      - nodes
      - nodes/metrics
      - services
      - endpoints
      - pods
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - get
  - nonResourceURLs:
      - "/metrics"
    verbs:
      - get
---
# Role binding
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
  - kind: ServiceAccount
    name: prometheus
    namespace: kube-system


prometheus-service.yaml

kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/name: "Prometheus"
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  ports:
    - name: http
      port: 9090
      protocol: TCP
      targetPort: 9090
  selector:
    k8s-app: prometheus  # must match the pod label in prometheus-statefulset.yaml; labels are case-sensitive


prometheus-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  prometheus.yml: |
    rule_files:
      - /etc/config/rules/*.rules
    scrape_configs:
      - job_name: prometheus
        static_configs:
          - targets:
              - localhost:9090
      #
      # - job_name: kubernetes-nodes
      #   scrape_interval: 30s
      #   static_configs:
      #     - targets:
      #         - 10.6.76.23:9100
      #         - 10.6.76.24:9100
      #         - 10.6.76.25:9100
      - job_name: kubernetes-apiservers
        kubernetes_sd_configs:
          - role: endpoints
        relabel_configs:
          - action: keep
            regex: default;kubernetes;https
            source_labels:
              - __meta_kubernetes_namespace
              - __meta_kubernetes_service_name
              - __meta_kubernetes_endpoint_port_name
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      - job_name: kubernetes-nodes-kubelet
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      - job_name: kubernetes-nodes-cadvisor
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __metrics_path__
            replacement: /metrics/cadvisor
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          insecure_skip_verify: true
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      - job_name: kubernetes-service-endpoints
        kubernetes_sd_configs:
          - role: endpoints
        relabel_configs:
          - action: keep
            regex: true
            source_labels:
              - __meta_kubernetes_service_annotation_prometheus_io_scrape
          - action: replace
            regex: (https?)
            source_labels:
              - __meta_kubernetes_service_annotation_prometheus_io_scheme
            target_label: __scheme__
          - action: replace
            regex: (.+)
            source_labels:
              - __meta_kubernetes_service_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
              - __address__
              - __meta_kubernetes_service_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_service_name
            target_label: kubernetes_name
      - job_name: kubernetes-services
        kubernetes_sd_configs:
          - role: service
        metrics_path: /probe
        params:
          module:
            - http_2xx
        relabel_configs:
          - action: keep
            regex: true
            source_labels:
              - __meta_kubernetes_service_annotation_prometheus_io_probe
          - source_labels:
              - __address__
            target_label: __param_target
          - replacement: blackbox
            target_label: __address__
          - source_labels:
              - __param_target
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels:
              - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - source_labels:
              - __meta_kubernetes_service_name
            target_label: kubernetes_name
      - job_name: kubernetes-pods
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - action: keep
            regex: true
            source_labels:
              - __meta_kubernetes_pod_annotation_prometheus_io_scrape
          - action: replace
            regex: (.+)
            source_labels:
              - __meta_kubernetes_pod_annotation_prometheus_io_path
            target_label: __metrics_path__
          - action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            source_labels:
              - __address__
              - __meta_kubernetes_pod_annotation_prometheus_io_port
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            source_labels:
              - __meta_kubernetes_namespace
            target_label: kubernetes_namespace
          - action: replace
            source_labels:
              - __meta_kubernetes_pod_name
            target_label: kubernetes_pod_name
    alerting:
      alertmanagers:
        - static_configs:
            - targets: ["alertmanager:80"]


prometheus-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    k8s-app: prometheus
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v2.2.1
spec:
  serviceName: "prometheus"
  replicas: 1
  podManagementPolicy: "Parallel"
  updateStrategy:
    type: "RollingUpdate"
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: prometheus
      initContainers:
        - name: "init-chown-data"
          image: "busybox:latest"
          imagePullPolicy: "IfNotPresent"
          command: ["chown", "-R", "65534:65534", "/data"]
          volumeMounts:
            - name: prometheus-data
              mountPath: /data
              subPath: ""
      containers:
        - name: prometheus-server-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9090/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
        - name: prometheus-server
          image: "prom/prometheus:v2.2.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/prometheus.yml
            - --storage.tsdb.path=/data
            - --web.console.libraries=/etc/prometheus/console_libraries
            - --web.console.templates=/etc/prometheus/consoles
            - --web.enable-lifecycle
          ports:
            - containerPort: 9090
          readinessProbe:
            httpGet:
              path: /-/ready
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          livenessProbe:
            httpGet:
              path: /-/healthy
              port: 9090
            initialDelaySeconds: 30
            timeoutSeconds: 30
          # based on 10 running nodes with 30 pods each
          resources:
            limits:
              cpu: 200m
              memory: 1000Mi
            requests:
              cpu: 200m
              memory: 1000Mi
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: prometheus-data
              mountPath: /data
            - name: prometheus-rules
              mountPath: /etc/config/rules
              subPath: ""
      terminationGracePeriodSeconds: 300
      volumes:
        - name: config-volume
          configMap:
            name: prometheus-config
        - name: prometheus-rules
          configMap:
            name: prometheus-rules
  volumeClaimTemplates:
    - metadata:
        name: prometheus-data
      spec:
        storageClassName: managed-nfs-storage
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: "16Gi"

Check that the services are up:

[root@k8s-master prometheus]# kubectl get pv,pvc,cm,pod,svc -n kube-system |grep prometheus

persistentvolume/kube-system-prometheus-data-prometheus-0-pvc-939cfe8c-427d-4731-91f3-b146dd8f61e2 16Gi RWO Delete Bound kube-system/prometheus-data-prometheus-0 managed-nfs-storage 2m14s

persistentvolumeclaim/prometheus-data-prometheus-0 Bound kube-system-prometheus-data-prometheus-0-pvc-939cfe8c-427d-4731-91f3-b146dd8f61e2 16Gi RWO managed-nfs-storage 2m15s

configmap/prometheus-config 1 2m15s

configmap/prometheus-rules 2 2m15s

pod/prometheus-0 2/2 Running 0 2m15s

service/prometheus NodePort 10.97.213.127 <none> 9090:32281/TCP 2m15s

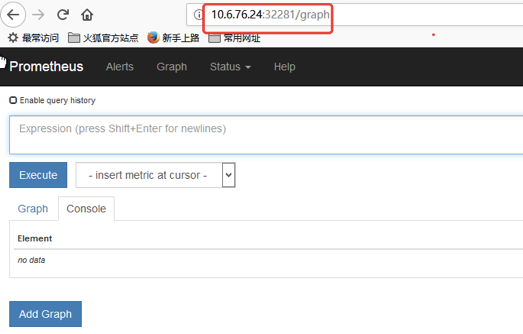

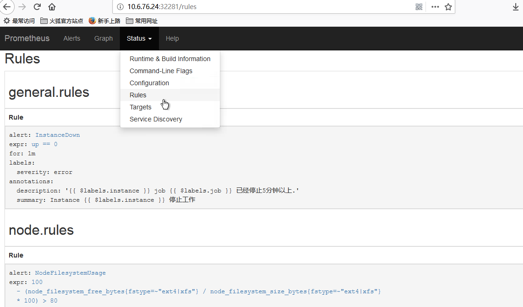

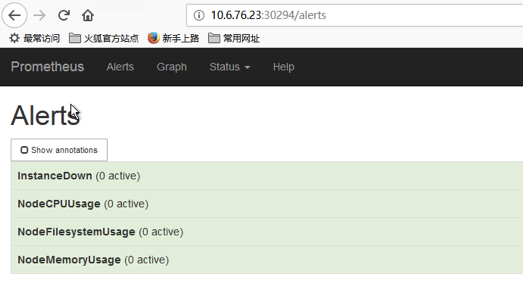

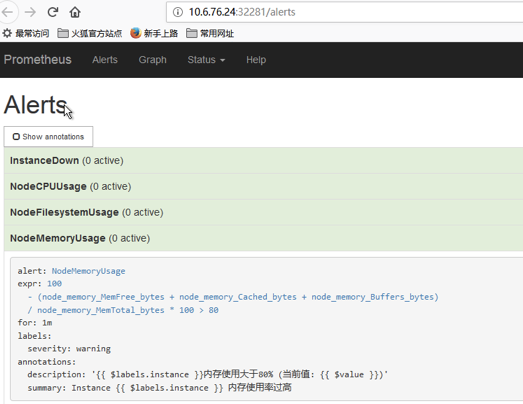

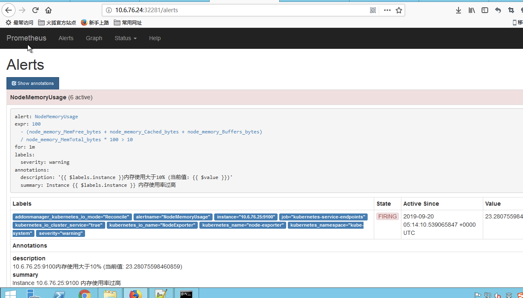

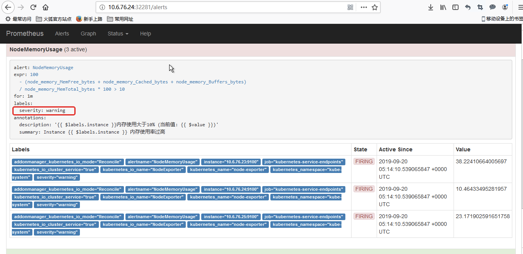

5.5 Open Prometheus and check that the alerting rules appear under Alerts

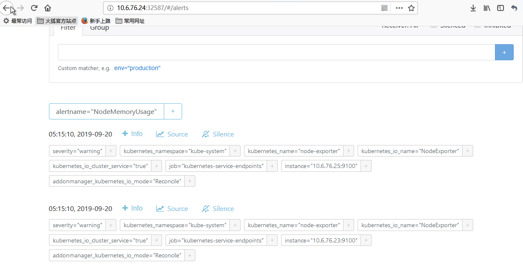

5.6 Open the Alertmanager web UI

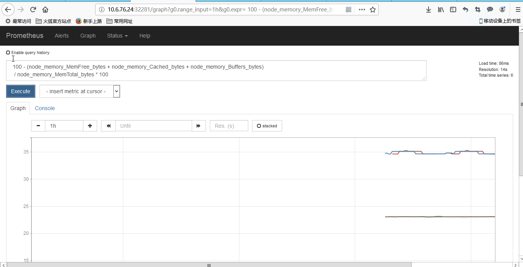

5.7 Testing the memory email alert

Our memory threshold is 80%.

In Prometheus, memory usage sits at only about 20%,

so we lower the threshold to 10%:

[root@k8s-master prometheus]# vim prometheus-rules.yaml

          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 10
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} memory usage is too high"
              description: "{{ $labels.instance }} memory usage is above 10% (current value: {{ $value }})"

[root@k8s-master prometheus]# kubectl apply -f prometheus-rules.yaml

configmap/prometheus-rules configured

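# Surprisingly, the change was not hot-reloaded... maybe I was too impatient; perhaps it takes about 5 minutes.
# Note: the configmap-reload sidecar in this StatefulSet only mounts and watches config-volume (/etc/config), so edits to the prometheus-rules ConfigMap never trigger a reload on their own.
# Once the kubelet has synced the new rule files into the pod, POST /-/reload (enabled by --web.enable-lifecycle) is a lighter alternative to recreating the StatefulSet.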

[root@k8s-master prometheus]# kubectl delete -f prometheus-statefulset.yaml

statefulset.apps "prometheus" deleted

[root@k8s-master prometheus]# kubectl apply -f prometheus-statefulset.yaml

statefulset.apps/prometheus created

[root@k8s-master prometheus]#

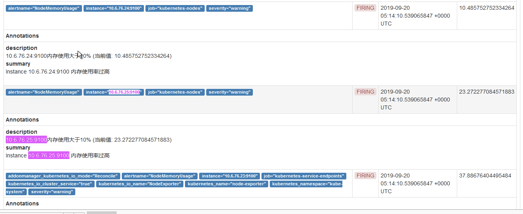

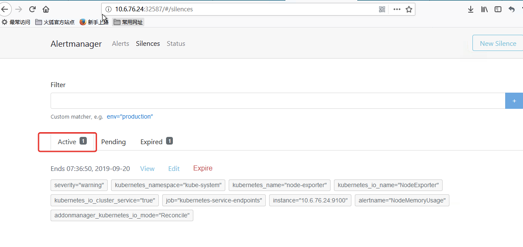

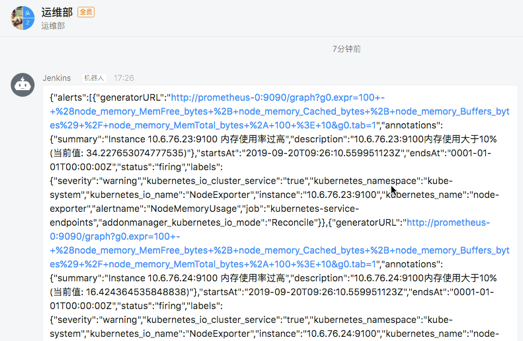

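The alert fires.

The page now shows the alerting rule we just defined, together with its state. During its lifecycle an alert is in one of these 3 states:

- inactive: the alert is neither pending nor firing

- pending: the expression is true, but has not yet stayed true for the duration set by `for`

- firing: the expression has stayed true for longer than the `for` duration

Alerts shown in pink are firing: they have already been pushed to Alertmanager, and only in this state is the notification actually sent.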

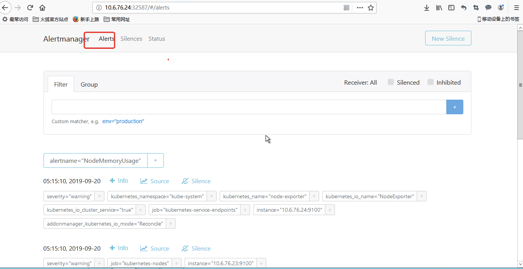

The alerts also show up in Alertmanager:

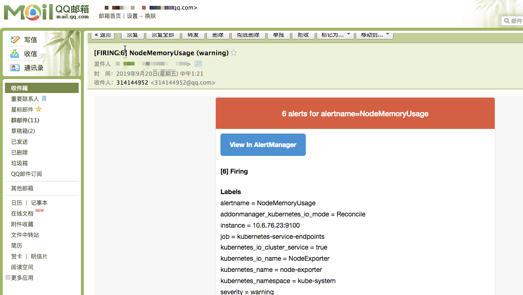

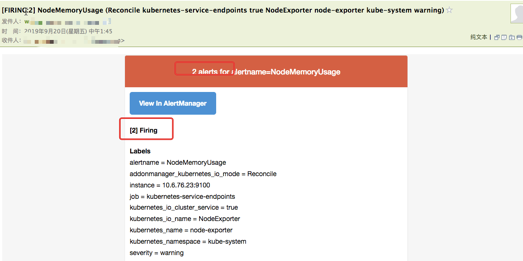

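QQ Mail receives the alerts merged into a single email, which is nice.

But I have only one master and two nodes, yet 6 alerts came out.

My guess was this part: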

[root@k8s-master prometheus]# vim prometheus-configmap.yaml

      #- job_name: kubernetes-nodes
      #  scrape_interval: 30s
      #  static_configs:
      #    - targets:
      #        - 10.6.76.23:9100
      #        - 10.6.76.24:9100
      #        - 10.6.76.25:9100

[root@k8s-master prometheus]# kubectl apply -f prometheus-configmap.yaml

configmap/prometheus-config configured

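Sure enough, the configuration copied from the internet had a trap: this static job scraped the same node-exporter targets (:9100) that other jobs already scrape, so every node produced two alerts. The configuration files above already have it commented out, so you will not run into this.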
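One way the double scrape arises, sketched under assumptions: the kubernetes-service-endpoints job keeps every Service annotated with prometheus.io/scrape: "true", so a node-exporter Service like the one below is already scraped once per node, and a static job listing the same :9100 addresses adds a second series per node. Because the memory rule does not aggregate, each series fires its own alert. The Service name, selector label, and port name here are assumptions about how node-exporter was deployed, not taken from this setup.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: node-exporter              # assumed name
  namespace: kube-system
  annotations:
    prometheus.io/scrape: "true"   # matched by the "keep" relabel rule of kubernetes-service-endpoints
spec:
  selector:
    k8s-app: node-exporter         # assumed DaemonSet pod label
  ports:
    - name: metrics                # assumed port name
      port: 9100
      targetPort: 9100
```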

3 alerts for alertname=NodeMemoryUsage

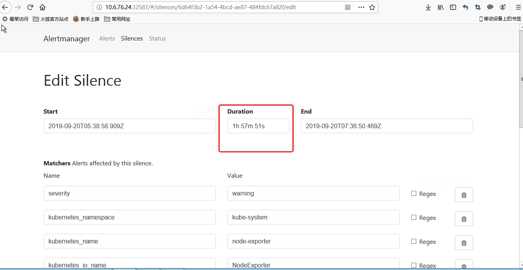

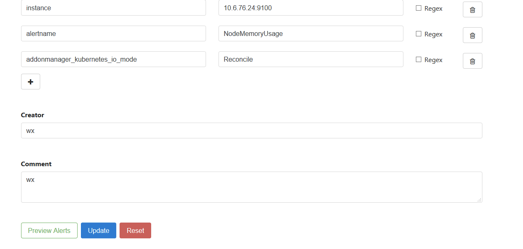

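We silence one of the alerts; the default silence duration appears to be 2 hours.

Five minutes later,

Prometheus still shows 3 alerts,

but Alertmanager has applied the silence, and only 2 alerts are sent.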

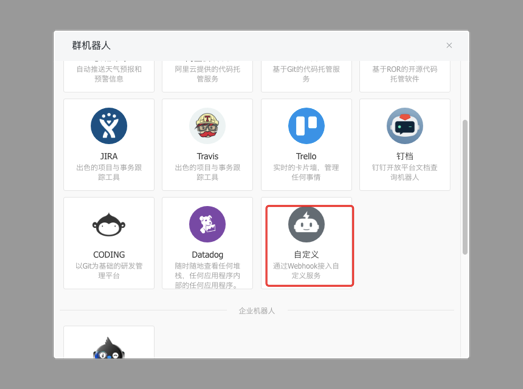

5.8 DingTalk alerts

https://www.qikqiak.com/k8s-book/docs/57.AlertManager%E7%9A%84%E4%BD%BF%E7%94%A8.html

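The email alerts above use Alertmanager's built-in email template. Alertmanager supports many kinds of receivers, such as Slack and WeChat, and the most flexible of them is the webhook: we define a webhook that receives the alerts, process them inside it, and send whatever alert message we like.

Prepare a DingTalk robot

Unfortunately DingTalk was in the middle of an upgrade and new robots could not be created, so we reuse the one left over from the earlier Jenkins setup.

Configure the alert hook

You can customize the alert payload to your own needs: github.com/cnych/alertmanager-dingtalk-hook

The corresponding manifest (dingtalk-hook.yaml) is: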

#cat dingtalk-hook.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: dingtalk-hook
  namespace: kube-system
spec:
  # apps/v1 requires a selector that matches the pod template labels
  selector:
    matchLabels:
      app: dingtalk-hook
  template:
    metadata:
      labels:
        app: dingtalk-hook
    spec:
      containers:
        - name: dingtalk-hook
          image: cnych/alertmanager-dingtalk-hook:v0.2
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5000
              name: http
          env:
            - name: ROBOT_TOKEN
              valueFrom:
                secretKeyRef:
                  name: dingtalk-secret
                  key: token
          resources:
            requests:
              cpu: 50m
              memory: 100Mi
            limits:
              cpu: 50m
              memory: 100Mi
---
apiVersion: v1
kind: Service
metadata:
  name: dingtalk-hook
  namespace: kube-system
spec:
  selector:
    app: dingtalk-hook
  ports:
    - name: hook
      port: 5000
      targetPort: http

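Note that the manifest above declares a ROBOT_TOKEN environment variable. Because this is sensitive information, it is read from a Secret object. Create a Secret named dingtalk-secret with the following command, then deploy the resources above: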

[root@k8s-master alertmanager]# kubectl create secret generic dingtalk-secret --from-literal=token=17549607d838b3015d183384ffe53333b13df0a98563150df241535808e10781 -n kube-system

secret/dingtalk-secret created

[root@k8s-master alertmanager]# kubectl create -f dingtalk-hook.yaml

deployment.extensions/dingtalk-hook created

service/dingtalk-hook created

[root@k8s-master alertmanager]#

[root@k8s-master alertmanager]# kubectl get pod,deploy -n kube-system | grep dingtalk

pod/dingtalk-hook-686ddd6976-tp9g4 1/1 Running 0 3m18s

deployment.extensions/dingtalk-hook 1/1 1 1 3m18s

[root@k8s-master alertmanager]#
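If you prefer keeping the Secret as a manifest instead of running kubectl create secret, a minimal sketch follows. The token value is a placeholder for your robot's access token; stringData accepts the plain value and Kubernetes stores it base64-encoded.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: dingtalk-secret       # referenced by secretKeyRef in dingtalk-hook.yaml
  namespace: kube-system
type: Opaque
stringData:
  token: "<your-dingtalk-robot-token>"   # placeholder; exposed to the pod as ROBOT_TOKEN via key "token"
```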

Once it is deployed, we can give Alertmanager a webhook by adding a route and a receiver to the configuration above:

#cat alertmanager-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  # Name of the ConfigMap
  name: alertmanager-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      # SMTP settings for alert emails
      smtp_smarthost: 'smtp.163.com:25'
      smtp_from: 'w.jjwx@163.com'
      smtp_auth_username: 'w.jjwx@163.com'
      smtp_auth_password: '<password>'
      smtp_hello: '163.com'
      smtp_require_tls: false
    route:
      # Labels used to regroup incoming alerts; e.g. all alerts carrying cluster=A and alertname=LatencyHigh are batched into one group
      group_by: ['job','alertname','severity']
      # After a new group is created, wait at least group_wait before the first notification, so several alerts for the same group can be collected and sent together
      group_wait: 30s
      # After the first notification for a group, wait group_interval before notifying about new alerts added to that group
      group_interval: 5m
      # After a notification has been sent successfully, wait repeat_interval before sending it again
      repeat_interval: 12h
      #group_interval: 5m
      #repeat_interval: 12h
      receiver: default  # default receiver: alerts not matched by any child route are sent here
      routes:
        - receiver: webhook
          match:
            alertname: NodeMemoryUsage  # match the memory alert
    receivers:
      - name: 'default'
        email_configs:
          - to: '314144952@qq.com'
            send_resolved: true
      - name: 'webhook'
        webhook_configs:
          - url: 'http://dingtalk-hook:5000'
            send_resolved: true

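Notes on some of the parameters:

route:
  # Labels used to regroup incoming alerts. For example, all alerts carrying both cluster=A and alertname=LatencyHigh are batched into one group
  group_by: ['alertname', 'cluster']
  # After a new group is created, wait at least group_wait before sending the first notification, so that multiple alerts for the same group can be collected and sent together
  group_wait: 30s
  # After the first notification for a group has been sent, wait group_interval before notifying about new alerts that join that group
  group_interval: 5m
  # After a notification has been sent successfully, wait repeat_interval before sending it again
  repeat_interval: 5m
  # Default receiver: alerts not matched by any child route are sent here
  receiver: default
  # All of the properties above are inherited by every child route and can be overridden on each child route.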
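To illustrate the inheritance note: a sketch of a child route that overrides one inherited setting and uses continue, so that after it matches, the sibling routes below it are still evaluated. Here the memory alert therefore reaches both the DingTalk webhook and email. The 1h value is illustrative.

```yaml
route:
  receiver: default
  group_by: ['alertname', 'cluster']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 5m
  routes:
    - receiver: webhook
      match:
        alertname: NodeMemoryUsage
      repeat_interval: 1h   # overrides the inherited 5m for this route only
      continue: true        # keep matching the sibling routes below
    - receiver: default
      match:
        alertname: NodeMemoryUsage   # reached only because of continue above
```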

[root@k8s-master alertmanager]# kubectl get pod,svc -n kube-system| grep -E "prome|alert"

pod/alertmanager-6778cc5b7c-jtwbt 2/2 Running 3 3m43s

pod/prometheus-0 2/2 Running 0 104m

service/alertmanager NodePort 10.111.52.119 <none> 80:32587/TCP 23h

service/prometheus NodePort 10.97.213.127 <none> 9090:32281/TCP 3h59m

[root@k8s-master alertmanager]#

Here we configured a receiver named webhook with the address http://dingtalk-hook:5000, which is the Service address of the DingTalk webhook receiver deployed above.

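The child route sends every alert labeled alertname=NodeMemoryUsage to the webhook receiver. You can match on any other label the alert carries, such as severity, as long as the rule sets it. Alerts that no child route matches fall through to the default receiver. For example, with two alerts alertname=CPU*** and alertname=MEM***, the webhook route matches one of them, and the other is sent by the default receiver, which is email in our case.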
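A sketch of routing by severity instead of by alert name, assuming the rules set severity to warning or error as the rules in section 5.2 do. Alertmanager anchors match_re expressions, so this matches exactly those two values.

```yaml
route:
  receiver: default
  routes:
    - receiver: webhook
      match_re:
        severity: warning|error   # anchored regex on the severity label
```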

Update the Alertmanager and Prometheus ConfigMaps (delete them, then recreate them). After the update, wait a moment and trigger a reload so the change takes effect:

curl -X POST "http://10.97.213.127:9090/-/reload"

Sending the alert

After a while the node memory alert fires. Because its alertname matches the child route, it is routed to the webhook receiver, i.e. the custom dingtalk-hook above. Once it fires, watch that Pod's logs:

[root@k8s-master alertmanager]# kubectl logs -f dingtalk-hook-686ddd6976-tp9g4 -n kube-system

* Serving Flask app "app" (lazy loading)

* Environment: production

WARNING: Do not use the development server in a production environment.

Use a production WSGI server instead.

* Debug mode: off

* Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)

127.0.0.1 - - [20/Sep/2019 07:52:39] "GET / HTTP/1.1" 200 -

10.254.2.1 - - [20/Sep/2019 07:54:05] "GET / HTTP/1.1" 200 -

10.254.2.1 - - [20/Sep/2019 07:54:05] "GET /favicon.ico HTTP/1.1" 404 -

10.254.1.176 - - [20/Sep/2019 08:31:11] "POST / HTTP/1.1" 200 -

10.254.2.1 - - [20/Sep/2019 09:16:07] "GET / HTTP/1.1" 200 -

{'receiver': 'webhook', 'status': 'firing', 'alerts': [{'status': 'firing', 'labels': {'addonmanager_kubernetes_io_mode': 'Reconcile', 'alertname': 'NodeMemoryUsage', 'instance': '10.6.76.24:9100', 'job': 'kubernetes-service-endpoints', 'kubernetes_io_cluster_service': 'true', 'kubernetes_io_name': 'NodeExporter', 'kubernetes_name': 'node-exporter', 'kubernetes_namespace': 'kube-system', 'severity': 'warning'}, 'annotations': {'description': '10.6.76.24:9100内存使用大于10% (当前值: 15.369550162495912)', 'summary': 'Instance 10.6.76.24:9100 内存使用率过高'}, 'startsAt': '2019-09-20T07:06:10.54216046Z',

………

5.9 WeChat Work alerts

https://www.cnblogs.com/xzkzzz/p/10211394.html

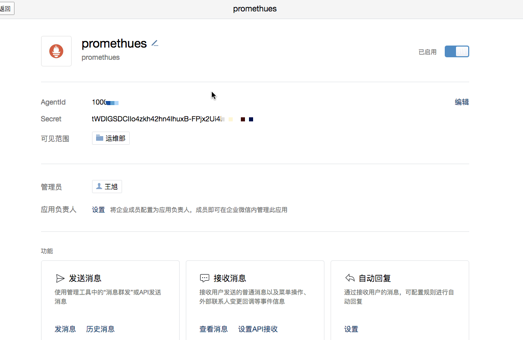

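Configure WeChat Work

Log in to WeChat Work, open App Management, click Create App, and fill in the app details:

Open the prometheus app we created and note down the following information:

Add an alert message template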

apiVersion: v1
kind: ConfigMap
metadata:
  name: wechat-tmpl
  namespace: kube-system
data:
  wechat.tmpl: |
    {{ define "wechat.default.message" }}
    {{ range .Alerts }}
    ========start==========
    Alert source: prometheus_alert
    Severity: {{ .Labels.severity }}
    Alert name: {{ .Labels.alertname }}
    Affected host: {{ .Labels.instance }}
    Summary: {{ .Annotations.summary }}
    Details: {{ .Annotations.description }}
    Fired at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
    ========end==========
    {{ end }}
    {{ end }}
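Alertmanager only loads template files listed under the top-level templates key of alertmanager.yml. A sketch of the lines that would point it at the file mounted at /etc/alertmanager-tmpl. As far as I understand, a user template that defines wechat.default.message takes precedence over the built-in template of the same name; setting message explicitly just makes the choice visible.

```yaml
templates:
  - '/etc/alertmanager-tmpl/*.tmpl'
receivers:
  - name: 'wechat'
    wechat_configs:
      - message: '{{ template "wechat.default.message" . }}'
        # corp_id, agent_id, api_secret, to_party, to_user as in the WeChat configuration
```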

Mount the template into the Alertmanager pod at /etc/alertmanager-tmpl:

[root@k8s-master alertmanager]# tail -25 alertmanager-deployment.yaml

            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9093/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: wechattmpl
              mountPath: /etc/alertmanager-tmpl
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
      volumes:
        - name: config-volume
          configMap:
            name: alertmanager-config
        - name: storage-volume
          persistentVolumeClaim:
            claimName: alertmanager
        - name: wechattmpl
          configMap:
            name: wechat-tmpl
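In the excerpt above, the wechattmpl mount sits under the configmap-reload sidecar (the container whose args are --volume-dir and --webhook-url), so the Alertmanager process itself cannot see the template file. A sketch of the same mount on the prometheus-alertmanager container instead, keeping that container's existing mounts from section 3.3:

```yaml
        - name: prometheus-alertmanager
          image: "prom/alertmanager:v0.14.0"
          # args, ports, readinessProbe and resources unchanged from section 3.3
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: storage-volume
              mountPath: "/data"
              subPath: ""
            - name: wechattmpl                  # the volume defined under volumes
              mountPath: /etc/alertmanager-tmpl
              readOnly: true
```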

Set up an alert

Add a new alert to the alerting rules:

          - alert: NodeMemoryUsage
            expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 10
            for: 1m
            labels:
              severity: warning
            annotations:
              summary: "Instance {{ $labels.instance }} memory usage is too high"
              description: "{{ $labels.instance }} memory usage is above 10% (current value: {{ $value }})"

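Configure WeChat delivery

Alertmanager supports WeChat Work notifications out of the box. Based on the official documentation, our configuration is as follows: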

[root@k8s-master alertmanager]# cat alertmanager-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

# 配置文件名称

name: alertmanager-config

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: EnsureExists

data:

alertmanager.yml: |

global:

resolve_timeout: 5m

# 告警自定义邮件

smtp_smarthost: 'smtp.163.com:25'

smtp_from: 'w.jjwx@163.com'

smtp_auth_username: 'w.jjwx@163.com'

smtp_auth_password: '***'

smtp_hello: '163.com'

smtp_require_tls: false

route:

# 这里的标签列表是接收到报警信息后的重新分组标签,例如,接收到的报警信息里面有许多具有 cluster=A 和 alertname=LatncyHigh 这样的标签的报警信息将会批量被聚合到一个分组里面

group_by: ['job','alertname','severity']

# 当一个新的报警分组被创建后,需要等待至少group_wait时间来初始化通知,这种方式可以确保您能有足够的时间为同一分组来获取多个警报,然后一起触发这个报警信息。

group_wait: 30s

group_interval: 1m

# 当第一个报警发送后,等待'group_interval'时间来发送新的一组报警信息。

repeat_interval: 2m

# 如果一个报警信息已经发送成功了,等待'repeat_interval'时间来重新发送他们

#group_interval: 5m

#repeat_interval: 12h

receiver: default #默认的receiver:如果一个报警没有被一个route匹配,则发送给默认的接收器

routes:

- receiver: wechat

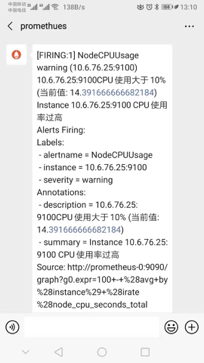

match:

alertname: NodeCPUUsage #匹配CPU报警

- receiver: webhook

match:

alertname: NodeMemoryUsage #匹配这个内存报警

receivers:

- name: 'default'

email_configs:

- to: '314144952@qq.com'

send_resolved: true

- name: 'webhook'

webhook_configs:

- url: 'http://dingtalk-hook:5000'

send_resolved: true

- name: 'wechat'

wechat_configs:

- corp_id: 'wxd6b528f56d453***'

to_party: '运维部'

to_user: "@all"

agent_id: '10000**'

api_secret: 'tWDIGSDCIIo4zkh42hn4IhuxB-FPjx2Ui4E0Vqt***'

send_resolved: true

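wechat_configs parameters

- send_resolved: whether to send a notification when the alert is resolved; defaults to false

- api_secret: the Secret of the app created in WeChat Work

- api_url: the WeChat API URL; the default is fine

- corp_id: WeChat Work → My Company → the Corp ID at the bottom of the page

- message: the alert message template; default: template "wechat.default.message"

- agent_id: the AgentId of the app created in WeChat Work

- to_user: users who receive the message; use @all for all users

- to_party: departments that receive the message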
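corp_id, api_secret and api_url can also be set once under global (as wechat_api_corp_id, wechat_api_secret and wechat_api_url) and are then inherited by every wechat_configs entry. A sketch; the angle-bracket values are placeholders, and the URL shown is Alertmanager's default WeChat API endpoint.

```yaml
global:
  wechat_api_url: 'https://qyapi.weixin.qq.com/cgi-bin/'  # the default value
  wechat_api_corp_id: '<corp-id>'                          # placeholder
  wechat_api_secret: '<app-secret>'                        # placeholder
receivers:
  - name: 'wechat'
    wechat_configs:
      - agent_id: '<agent-id>'   # placeholder
        to_user: '@all'
        send_resolved: true
```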

Load the configuration files:

kubectl apply -f alertmanager-deployment.yaml

kubectl apply -f alertmanager-configmap.yaml

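The alert is received.

Copyright notice: this article is not an original work of this site; copyright belongs to the original author | Original URL: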